Running a SaaS business often means dealing with repetitive tasks and limited customization options. These challenges can slow down growth and frustrate your team. If you’re a SaaS owner looking to streamline operations, AI, specifically Large Language Models (LLMs), could be the solution you need.

LLMs have the potential to transform how your SaaS operates, automating tasks and freeing up valuable time. However, while the benefits are clear, implementing LLMs can be tough: you’ll need significant computing resources and careful optimization to make them work effectively.

This is where Hugging Face Hub comes into play. It offers a user-friendly platform that simplifies access to a wide range of LLMs, making it easier to integrate AI into your SaaS hassle-free.

In this article, we’ll explore the top 10 LLM models available on Hugging Face that are ideal for SaaS businesses. By the end, you’ll have a clear understanding of which models best suit your needs, helping you make an informed decision that can drive your business forward.

Benefits of LLM Models for SaaS Platforms

LLMs are transforming how SaaS platforms operate, offering significant advantages that can drive innovation. By understanding how these models work and the specific benefits they bring, SaaS companies can use them to stay competitive and responsive to customer needs.

Scalability

One of the standout benefits of LLMs for SaaS platforms is their scalability. For example, if your SaaS product supports a field service organization managing technicians across different regions, an LLM can effortlessly scale to meet fluctuating demands.

Whether it’s handling a spike in customer inquiries, providing real-time support to technicians, or maintaining extensive chat histories, these models adapt quickly. This flexibility helps your service remain productive, even during peak periods, making it easier to manage high volumes of interactions without compromising quality.

Security

Security is another critical aspect when integrating LLMs into SaaS platforms. Field technicians and other users often handle sensitive data, such as customer information and proprietary business data, so it is vital to deploy LLMs securely. SaaS companies must prioritize features like data encryption, access controls, and regular security audits to safeguard against vulnerabilities.

This ensures that while the LLM improves your functionality, it doesn’t compromise the security and privacy of the data it processes.
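
One practical complement to those controls is masking obvious identifiers before a prompt ever reaches the model. The sketch below is only an illustration under stated assumptions: the `redact` helper and its two regular expressions are hypothetical, they catch only simple email addresses and phone-like numbers, and they do not replace encryption, access controls, or audits.

```python
import re

# Hypothetical patterns for illustration; real PII detection needs far more care.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


message = "Contact jane.doe@example.com or +1 (555) 010-9999 about unit 7."
safe_message = redact(message)  # send safe_message to the LLM, not message
```

Running the redaction on your side of the API boundary means the raw identifiers never appear in prompts or in any logs kept downstream.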

Accessibility

Accessibility is also a key benefit of using LLMs in SaaS platforms. Not all users are AI experts, and that’s where LLMs shine. For example, a technician in the field could use an LLM-powered chat interface on their mobile device to troubleshoot issues.

They can describe problems in plain language and receive detailed guidance, retrieve equipment manuals, or get real-time advice without needing any specialized training. This user-friendly approach not only boosts productivity but also increases the adoption of AI tools within your SaaS product.

Book a demo with Fungies.io to explore how our subscription management solutions can integrate seamlessly with advanced AI capabilities. Empower your team with scalable, secure, and accessible tools that drive innovation and keep your business ahead of the competition.

How We Picked the Best LLM Models for SaaS Businesses on Hugging Face

When selecting the best LLM models for SaaS businesses on Hugging Face, we focused on key factors to ensure each model aligns with the specific needs of a SaaS platform:

- Integration Capabilities: Ensuring the model can be integrated with minimal disruptions and quick deployment.

- Scalability: Evaluating the model’s ability to handle growing user bases and fluctuating demands without sacrificing performance.

- Costs and Affordability: Considering the overall value of the model to ensure it fits within your budget.

By balancing these factors, we identified the top 10 Hugging Face models that offer the best fit for SaaS businesses.
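
If you want to apply the same three criteria to your own shortlist, a simple weighted score is one way to do it. The sketch below is hypothetical throughout: the weights, the 0 to 10 rating scale, and the candidate names `model-a` and `model-b` are placeholders, not ratings of any real model.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    integration: float  # 0-10, higher means easier to integrate
    scalability: float  # 0-10, higher means better under load
    cost: float         # 0-10, higher means more affordable


# Assumed weights; adjust them to reflect your own priorities (they sum to 1).
WEIGHTS = {"integration": 0.40, "scalability": 0.35, "cost": 0.25}


def score(candidate: Candidate) -> float:
    """Weighted sum of the three criteria."""
    return sum(weight * getattr(candidate, key) for key, weight in WEIGHTS.items())


def rank(candidates: list[Candidate]) -> list[Candidate]:
    """Return candidates ordered from best to worst score."""
    return sorted(candidates, key=score, reverse=True)


shortlist = [
    Candidate("model-a", integration=8, scalability=6, cost=7),
    Candidate("model-b", integration=6, scalability=9, cost=5),
]
ranked = rank(shortlist)
```

The value of the exercise is less the final number than being forced to write down how much each criterion matters to you.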

10 Best LLM Models on Hugging Face

Here is a breakdown of the top 10 models to consider for your business. Most are large language models hosted on Hugging Face; the first is an image-generation model.

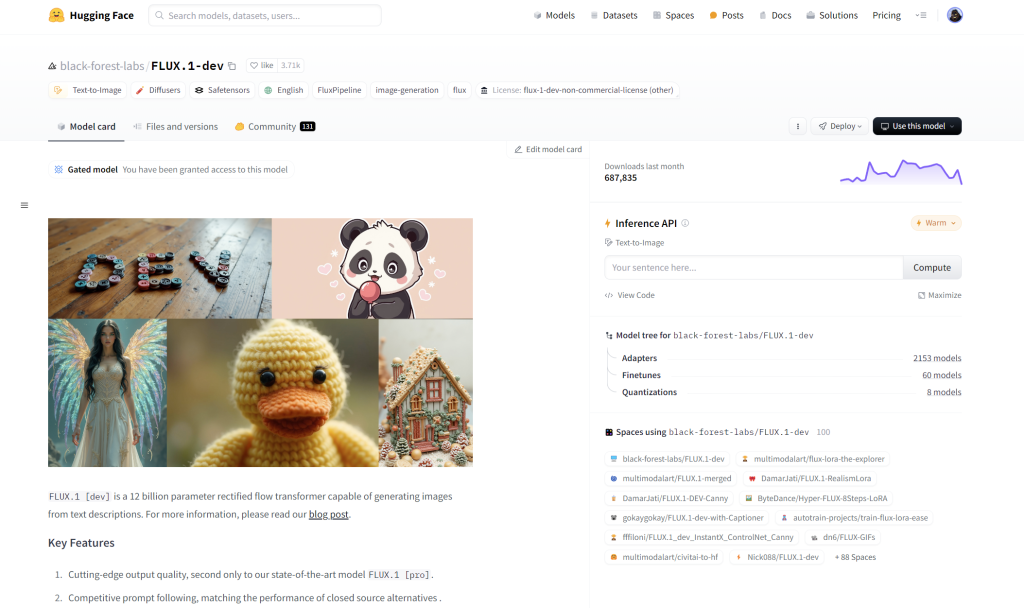

FLUX.1-dev is a text-to-image model, rather than a language model, designed for AI-driven image generation with high visual quality and close adherence to prompts. It suits creative professionals and developers, simplifying the production of detailed, high-quality images.

Whether you’re in design, art, or software development, this model offers a powerful platform to bring your creative ideas to life with precision and variety.

Why is This LLM Model Best for SaaS?

Because FLUX.1-dev excels at image generation, it is an excellent choice for SaaS businesses involved in creative tasks. Its weights are released under a non-commercial license, so review the license terms before using it in a commercial product.

Some of its key features include:

- Designed for efficiency, FLUX.1-dev is available for non-commercial use, offering capabilities similar to those of its pro version.

- This model comes with high-speed processing, making it optimized for local development and leading to fast image generation.

- You can easily integrate FLUX.1-dev into your applications via API, available through partners like Replicate and fal.ai.

RedPajama-INCITE-Chat-3B-v1 is a 2.8 billion parameter language model developed by Together, in collaboration with leaders from the open-source AI community. This model is fine-tuned on datasets like OASST1 and Dolly2 to enhance its capabilities in dialog-style interactions, making it a part of the broader RedPajama-INCITE series.

Why is This LLM Model Best for SaaS?

This model is a valuable tool for your company as it provides advanced dialog capabilities for customer service, virtual assistants, or educational tools. Its fine-tuning on specific datasets allows it to perform exceptionally well in chat interactions, making it ideal for applications that require smooth, natural language exchanges.

Here is a breakdown of its features:

- This model performs well on few-shot prompts, offering improved accuracy in classification and extraction tasks, which is beneficial for content moderation and information retrieval.

- It can also support work on the safe deployment of models that might generate harmful content, helping your company maintain responsible AI use.
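
The chat variant expects prompts in a `<human>:` / `<bot>:` format, as shown on its model card. The helper below is a minimal sketch of building such a prompt. The `build_prompt` name is hypothetical, and extending the single-turn format to earlier turns by simple concatenation is an assumption rather than documented behavior.

```python
def build_prompt(history: list[tuple[str, str]], user_message: str) -> str:
    """Format a conversation as alternating <human>/<bot> lines.

    history holds earlier (user, bot) turns; the prompt ends with an open
    "<bot>:" line so the model continues with its reply.
    """
    lines = []
    for user_turn, bot_turn in history:
        lines.append(f"<human>: {user_turn}")
        lines.append(f"<bot>: {bot_turn}")
    lines.append(f"<human>: {user_message}")
    lines.append("<bot>:")
    return "\n".join(lines)


single_turn = build_prompt([], "Who is Alan Turing?")
follow_up = build_prompt(
    [("How do I reset my password?", "Open Settings and choose Reset password.")],
    "And if I no longer have access to my email?",
)
```

Keeping prompt construction in one small function makes it easy to swap formats if you later move to a model with a different chat template.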

Ziya-LLaMA-13B-v1 is a powerful large-scale language model developed by the IDEA-CCNL team. This model has been trained to excel in a wide array of tasks, including translation, text classification, information extraction, programming, summarization, and more.

With strong language understanding and generation capabilities, Ziya-LLaMA-13B-v1 can handle your complex language processing tasks with reliability and safety.

Why is This LLM Model Best for SaaS?

Whether your SaaS involves machine translation, AI-powered content generation, or language-based educational tools, this model offers strong language understanding and generation abilities. Its bilingual capabilities make it an ideal choice for global applications.

The key features include:

- From translation to automated content generation, this model supports diverse tasks critical for your SaaS platform.

- This model can process both English and Chinese input and output, making it valuable if you have multilingual SaaS products.

- Ziya-LLaMA-13B-v1 has gone through extensive training, including human feedback learning, to confirm reliability and safety in various applications.

Llama-2-70b-hf is a highly advanced generative language model developed by Meta. As part of the Llama 2 family, this model is available in a pre-trained version specifically formatted for Hugging Face Transformers. The Llama 2 collection offers you models with varying parameter sizes, including fine-tuned versions tailored for dialogue and conversational use cases.

Why is This LLM Model Best for SaaS?

Llama-2-70b-hf is a versatile tool for SaaS businesses that require powerful natural language generation capabilities. It’s suitable for tasks such as text generation, summarization, and translation, making it ideal for SaaS platforms focused on content creation, customer interaction, or any application requiring sophisticated language processing.

Some of its features are:

- With 70 billion parameters, this model delivers robust performance across a range of natural language tasks.

- The model offers you versatile applications. It can assist you in text generation, summarization, and translation, and is optimized for conversational use cases.

- Llama-2-70b-chat-hf is a fine-tuned variant with high-quality conversational abilities, making it a good choice for building assistant-like chat features.
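
A parameter count translates directly into hardware requirements, which is worth checking before committing to a model of this size. The sketch below is a back-of-the-envelope estimate of the memory needed just to hold the weights. The `weight_memory_gb` helper is hypothetical, and the figure ignores activations, the attention cache, and framework overhead, so treat it as a lower bound.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, dtype: str = "fp16") -> float:
    """Approximate memory for model weights alone, in GB (10**9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


llama_70b_fp16 = weight_memory_gb(70e9, "fp16")  # 140.0 GB
llama_70b_int4 = weight_memory_gb(70e9, "int4")  # 35.0 GB
phi_1_5_fp16 = weight_memory_gb(1.3e9, "fp16")   # 2.6 GB
```

The gap between 140 GB and 2.6 GB is why the smaller models later in this list can be attractive when budgets are tight.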

LL7M is a Llama-like generative text model, specifically optimized for dialogue use cases. This model has been converted for the Hugging Face Transformers format and supports multiple languages. LL7M is designed to handle long conversations with an almost unlimited context length, though optimal performance is recommended within a 64K context length.

Why is This LLM Model Best for SaaS?

LL7M is a great choice if your SaaS business requires robust multilingual capabilities and dialogue optimization. Its ability to manage conversations in multiple languages makes it ideal for developing chatbots and virtual assistants that cater to a global audience.

Additionally, the model’s proficiency in generating coherent and contextually relevant text allows you to use it for content creation tasks.

Key features include:

- LL7M handles English, Chinese, Japanese, and German, making it versatile for global applications.

- This model is capable of creating coherent, contextually relevant text across various content types.

- You can also utilize the multilingual capabilities for text translation between supported languages.

Phi-1.5 is a 1.3 billion parameter model designed with a focus on common sense reasoning in natural language. Despite its relatively small size, Phi-1.5 delivers performance on natural language tasks that rivals models five times larger.

Available on Hugging Face, it was trained using a mix of data sources, including NLP synthetic texts, building on the approach of its predecessor, phi-1.

Why is This LLM Model Best for SaaS?

Phi-1.5 is particularly well-suited for your SaaS business if it relies on natural language processing for tasks such as question-answering, chat interactions, and coding assistance. Its compact size and efficiency make it a great fit for your applications where resource constraints are a consideration, yet high performance is required.

Here are some of its key features:

- This model performs well in tasks involving question-answering and conversational formats, making it ideal for your customer support chatbots or virtual assistants.

- This LLM delivers nearly state-of-the-art results in common sense reasoning and language understanding, comparable to much larger models.

- Phi-1.5 is trained without using potentially harmful web-crawled data which enhances safety and reduces exposure to biases or toxic content.

Mistral-7B-v0.1 is a 7-billion-parameter language model developed by Mistral AI, designed to deliver both high performance and efficiency. This model stands out for its ability to handle real-time applications, making it ideal for scenarios where you need quick and accurate responses.

Why is This LLM Model Best for SaaS?

Mistral-7B is a good fit for your SaaS business if it needs a powerful yet efficient language model for real-time applications. Its ability to excel in different tasks makes it a versatile tool for various SaaS platforms. Additionally, the model’s efficiency allows it to deliver rapid responses, which is crucial for customer-facing applications.

Some of the features include:

- According to Mistral AI, this model outperforms the larger Llama 2 13B on benchmarks in areas like mathematics, code generation, and reasoning, making it a reliable choice for demanding applications.

- Mistral-7B can be easily fine-tuned for specific tasks, such as conversation and question answering, allowing your company to tailor the model to your unique needs.
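
To get a feel for why fine-tuning a 7B model can be affordable, consider LoRA, a popular parameter-efficient technique (the article does not name a method, so LoRA is our choice for illustration). The sketch below counts the extra trainable parameters LoRA would add. The rank of 8, the choice of adapting only the query and value projections, and the layer shapes (hidden size 4096, 32 layers, 8 key/value heads of dimension 128) are assumptions based on the published Mistral-7B configuration, not figures from this article.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA adds two small matrices: A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


# Assumed Mistral-7B shapes; verify against the model's config before relying on them.
HIDDEN = 4096
KV_DIM = 8 * 128  # 8 key/value heads x head dimension 128
LAYERS = 32
RANK = 8          # hypothetical LoRA rank

per_layer = lora_params(HIDDEN, HIDDEN, RANK) + lora_params(HIDDEN, KV_DIM, RANK)
total_trainable = per_layer * LAYERS  # 3,407,872
share_of_model = total_trainable / 7e9  # roughly 0.05% of 7 billion parameters
```

Under these assumptions, only a few million parameters are trained while the base weights stay frozen, which is what keeps task-specific tuning within reach of a small team.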

Zephyr-7B Alpha is a versatile AI model built with seven billion parameters, making it a robust and adaptable tool for various tasks. This model has been primarily focused on language during its training, with its performance and usefulness further refined by a training approach called Direct Preference Optimization (DPO). This ensures that Zephyr-7B Alpha delivers accurate and prompt results across different applications.
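
For readers curious about what DPO optimizes, the sketch below computes the DPO loss for a single preference pair. It is a minimal illustration, not Zephyr's training code: the function name, the example log-probabilities, and the `beta` value of 0.1 are all assumptions.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response under
    either the model being trained (policy) or the frozen reference model.
    """
    chosen_gain = policy_chosen_logp - ref_chosen_logp
    rejected_gain = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_gain - rejected_gain)
    # -log(sigmoid(margin)), written in a numerically stable form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


no_preference = dpo_loss(-5.0, -5.0, -5.0, -5.0)   # equals log(2)
prefers_chosen = dpo_loss(-4.0, -6.0, -5.0, -5.0)  # lower loss
```

The loss falls as the policy raises the likelihood of the preferred response relative to the reference model, and rises when it drifts toward the rejected one.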

Why is This LLM Model Best for SaaS?

Zephyr-7B Alpha is a powerful asset for your SaaS company, offering strong capabilities in conversational AI, content creation, and more. Its ability to understand and generate content makes it particularly valuable for tasks requiring high-quality language processing.

Here is a rundown of its key features:

- Zephyr-7B Alpha can automatically generate code snippets and provide solutions for coding challenges, streamlining the software development process and supporting your developers in writing efficient code.

- The model aids researchers by offering insights, summaries, and explanations on various topics, making it an effective tool for literature reviews and data analysis.

- Zephyr-7B Alpha improves translation accuracy as it can handle idioms and nuances, making it a reliable choice for translation services.

Orca-2-7b is a language model designed primarily for research purposes, focusing on providing single-turn responses for different tasks. This model is particularly strong in tasks that require deep reasoning, making it a valuable tool for researchers exploring advanced AI capabilities.

Why is This LLM Model Best for SaaS?

While Orca-2-7b is built for research, it offers insights that can be valuable for your SaaS business, especially if you are focused on developing AI-driven tools for complex problem-solving and reasoning tasks.

This makes it an ideal foundation for building specialized SaaS applications that require efficient, accurate reasoning in fields like education, finance, or data analysis.

Some key features include:

- The model is optimized for tasks requiring deep reasoning, such as math problem-solving and text summarization, which are essential for SaaS applications in analytical domains.

- Orca-2-7b provides a foundation for developing better frontier models by experimenting with various reasoning techniques, offering potential for innovative SaaS solutions.

DeciLM-7B is a powerful 7.04 billion parameter decoder-only text generation model, recognized for its top performance. Released under the Apache 2.0 license, DeciLM-7B supports an 8K-token sequence length and utilizes the innovative variable Grouped-Query Attention (GQA).

Why is This LLM Model Best for SaaS?

DeciLM-7B is highly suitable for you if you are looking for a versatile, high-performance language model that can be fine-tuned for a variety of tasks. Its superior accuracy and efficiency make it ideal for applications ranging from customer service to complex data analysis.

Here is a list of some of its key features:

- The model excels in handling sequences, outperforming competitors in PyTorch benchmarks, which is crucial for real-time applications.

- Leveraging variable Grouped Query Attention (GQA), DeciLM-7B achieves an optimal balance between speed and accuracy, tailored for demanding SaaS applications.
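
Grouped-Query Attention saves memory by letting several query heads share one set of key/value heads, which shrinks the cache kept for each token during generation. The sketch below estimates that cache size. Every configuration in it (32 layers, head dimension 128, the per-layer head counts) is hypothetical and chosen for round numbers; it is not DeciLM-7B's actual layout. Only the 8K sequence length comes from the description above.

```python
def kv_cache_bytes(
    kv_heads_per_layer: list[int],
    head_dim: int,
    seq_len: int,
    bytes_per_value: int = 2,  # fp16
    batch: int = 1,
) -> int:
    """Memory for cached keys and values across all layers."""
    # Factor 2 covers one key vector and one value vector per head per token.
    per_token = sum(2 * heads * head_dim * bytes_per_value for heads in kv_heads_per_layer)
    return per_token * seq_len * batch


SEQ_LEN = 8192  # the 8K-token sequence length mentioned above

full_attention = kv_cache_bytes([32] * 32, 128, SEQ_LEN)          # 4 GiB
uniform_gqa = kv_cache_bytes([8] * 32, 128, SEQ_LEN)              # 1 GiB
variable_gqa = kv_cache_bytes([4] * 16 + [2] * 16, 128, SEQ_LEN)  # 0.375 GiB
```

A smaller cache per conversation means more concurrent users fit on the same GPU, which is where the speed and cost benefit for SaaS workloads comes from.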

Whether you want to offer monthly, yearly, or usage-based billing plans, Fungies.io has you covered. Book a demo with Fungies.io today and launch quickly with our ready-made subscription product templates.

Here’s a quick summary of the 10 models on Hugging Face discussed above:

| LLM Model | Key Features | Best For SaaS |
| --- | --- | --- |
| FLUX.1-dev | AI-driven image generation; high-speed processing; easy API integration | Creative SaaS platforms requiring image generation |
| RedPajama-INCITE-Chat-3B-v1 | 2.8 billion parameters; enhanced dialog capabilities; few-shot prompt accuracy | Customer service, virtual assistants, educational tools |
| Ziya-LLaMA-13B-v1 | Strong language processing; bilingual (English & Chinese); human feedback training | Multilingual SaaS, content generation, translation tools |
| Llama-2-70b-hf | 70 billion parameters; versatile NLP tasks; fine-tuned for conversation | Content creation, customer interaction, sophisticated NLP applications |
| LL7M | Multilingual support (English, Chinese, Japanese, German); coherent text generation | Global chatbots, virtual assistants, content creation |
| Phi-1.5 | Common sense reasoning; compact size with high performance; safe AI deployment | Customer support chatbots, resource-efficient applications |
| Mistral-7B-v0.1 | 7 billion parameters; high performance in math, code generation, reasoning | Real-time applications, tailored SaaS tools requiring quick responses |
| Zephyr-7B Alpha | Language & coding generation; DPO training for accuracy; improved translation accuracy | Conversational AI, content creation, code generation, translation services |
| Orca-2-7b | Deep reasoning for problem-solving; single-turn responses; foundation for research | AI-driven tools for education, finance, data analysis |
| DeciLM-7B | 7.04 billion parameters; GQA for speed & accuracy; 8K-token sequence length | Real-time customer service, complex data analysis, high-performance SaaS applications |

How to Select an LLM Model for Your SaaS Business

Choosing the right LLM (Large Language Model) for your SaaS business is crucial for maximizing its impact. Here’s a structured approach to help you make an informed decision.

Determine Specific Requirements

Start by identifying the specific needs of your SaaS business. For example, if you run a customer service platform, you might need an LLM to handle customer inquiries and automate responses.

On the other hand, if your SaaS focuses on content management, an LLM that excels in generating high-quality articles or product descriptions would be more relevant. Prioritize these use cases based on their strategic importance—like enhancing customer satisfaction or increasing content output efficiency.

Evaluate LLMs Based on Key Criteria

Here are some key criteria for evaluating an LLM:

- When evaluating LLMs, consider how well they integrate with your existing systems. If you use Python-based frameworks, check if the LLM supports easy integration with your Python environment.

- Another key factor is scalability. This means that if you anticipate a surge in user demand, you’ll need an LLM like DeciLM-7B, known for its superior sequence handling, to maintain performance during peak times.

- Lastly, consider ROI. An LLM like Zephyr-7B Alpha, which supports multilingual capabilities, might offer more value if you plan to expand into international markets.
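
As a rough picture of what Python integration can look like, the sketch below wraps the Hugging Face `transformers` text-generation pipeline behind two small functions. It assumes `transformers` and a backend such as PyTorch are installed. The helper names, the `Question:`/`Answer:` prompt wording, and the choice of `microsoft/phi-1_5` as the example model are illustrative assumptions, not recommendations from the model authors.

```python
def build_generator(model_id: str):
    """Create a text-generation pipeline (downloads the model on first use)."""
    from transformers import pipeline  # imported lazily; requires transformers

    return pipeline("text-generation", model=model_id)


def answer(generator, question: str, max_new_tokens: int = 64) -> str:
    """Ask one question and return only the newly generated text."""
    prompt = f"Question: {question}\nAnswer:"
    outputs = generator(prompt, max_new_tokens=max_new_tokens, do_sample=False)
    full_text = outputs[0]["generated_text"]  # by default this includes the prompt
    return full_text[len(prompt):].strip()


# Example usage (commented out because it downloads model weights):
# generator = build_generator("microsoft/phi-1_5")
# print(answer(generator, "What does a subscription billing platform do?"))
```

Because `answer` only needs a callable that behaves like a pipeline, you can swap in a different model, or a stub during unit tests, without touching the rest of your application.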

Researching Models on Hugging Face

You can also explore the best LLM models on Hugging Face directly. For instance, if you need a model for code generation, look into reviews for models like Code Llama. Read through the documentation and check community feedback to understand how well these models perform in real-world scenarios.

A review might also reveal that Mistral-7B is particularly effective for real-time applications, making it a strong candidate if your SaaS needs quick response times.

Test and Validate LLMs for SaaS Applications

After choosing an LLM, run small-scale experiments. If you selected Orca-2-7b for its reasoning capabilities, test it in a limited customer support pilot to see how well it handles complex inquiries.

Analyze the results by measuring response accuracy and user satisfaction. If the initial deployment shows gaps, iterate by fine-tuning the model using specific customer interaction data to improve its performance before a full-scale rollout.
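
A small evaluation harness makes this loop repeatable. The sketch below is a minimal example under stated assumptions: the `evaluate` helper, the keyword-match notion of a correct reply, and the sample cases are all hypothetical, and `generate` stands in for whatever function calls your chosen model.

```python
from typing import Callable


def evaluate(
    generate: Callable[[str], str],
    cases: list[tuple[str, str]],
) -> tuple[float, list[str]]:
    """Return accuracy and the prompts whose replies missed the expected keyword."""
    failures = []
    for prompt, expected_keyword in cases:
        reply = generate(prompt)
        if expected_keyword.lower() not in reply.lower():
            failures.append(prompt)
    accuracy = (len(cases) - len(failures)) / len(cases)
    return accuracy, failures


def stub_model(prompt: str) -> str:
    """Stand-in for a real model so the harness can be tried offline."""
    if "refund" in prompt:
        return "You can request a refund within 30 days."
    return "I am not sure."


cases = [
    ("How do I get a refund?", "refund"),
    ("How do I change my billing plan?", "plan"),
]
accuracy, failures = evaluate(stub_model, cases)
```

The failing prompts are the most useful output: they show where fine-tuning data or prompt changes are needed before a wider rollout. Keyword matching is crude, so pair it with human review or user satisfaction scores.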